If I have 3 million customers on the web, I should have 3 million stores. - Amazon

Already, 35 percent of what consumers purchase on Amazon and 75 percent of what they watch on Netflix come from product recommendations based on such algorithms. - McKinsey

Recommendation is an extremely valuable problem to solve in machine learning. Recommender systems (recsys) are more a class of techniques than a specific model, but they are important enough to warrant their own section.

Recsys in Marketing | Recsys in Push Notifications | Art of the Problem | Spencer Pao | TensorFlow | Eugene Yan

What are the three ways to approach Recommender Systems?

Collaborative Filtering

- Leverages user/item ratings to estimate user-user or item-item similarity, and recommends products accordingly.

- Can be user-based or item-based.

Content Based

- Is based on the intrinsic qualities of the content you are recommending (movies, songs, products).

- E.g., Spotify might recommend songs that sound similar to ones you already listen to.

Deep Learning Approaches

- Deep learning models can uncover complex, abstract patterns in user data, revealing nuanced preferences and behaviors.

- They can find relationships and hidden features in large datasets that simpler algorithms may miss.

Note: most modern recommender systems will have multiple connected components.

Explain the core logic behind item and user based collaborative filtering

Why it's 'collaborative'-'filtering'

- Collaborative: The underlying assumption is that if a group of users agreed in their evaluation of certain items in the past, they will likely agree again in the future. This collaboration is implicit in the data collected from various users.

- Filtering: The term ‘filtering’ is used because the system essentially filters through a large volume of data to make personalized recommendations for an individual user. It sifts through the preferences of many users to predict what a particular user might like.

Item based

- If items A and B were rated similarly by many users, then they are similar, and therefore someone who likes item A will probably also like item B.

User based

- If users A and B rated items similarly in the past, they will probably have similar preferences in the future, so items that user A liked can be recommended to user B.
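As a minimal sketch of the two variants, the snippet below builds both similarity matrices from the same ratings. The ratings array, the use of cosine similarity, and the convention that 0 means "not rated" are all assumptions made for illustration.

```python
import numpy as np

def cosine_similarity_matrix(vectors: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of `vectors`."""
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    norms[norms == 0] = 1.0  # avoid dividing by zero for all-zero rows
    unit = vectors / norms
    return unit @ unit.T

# Hypothetical ratings: rows are users, columns are items, 0 = not rated.
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 1, 0],
    [1, 0, 5, 4],
], dtype=float)

user_sim = cosine_similarity_matrix(ratings)    # user-based: compare rows
item_sim = cosine_similarity_matrix(ratings.T)  # item-based: compare columns
```

The only difference between the two approaches at this stage is whether rows (users) or columns (items) are compared; treating unrated entries as 0 is a simplification that a real system would usually replace with mean-centering or masking.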

↳ Can you compare and contrast item vs user based collaborative filtering?

- Scalability and Performance:
  - User-Based can be more computationally intensive, as it requires calculating user-user similarities, which are very dynamic (they change frequently).
  - Item-Based often scales better with a large user base, as the item-item similarity matrix is generally more stable and changes less frequently than user-user similarities.

- Recommendation Diversity:
  - User-Based might offer more diverse recommendations since it’s based on the varied interests of similar users.
  - Item-Based can sometimes lead to narrower recommendations as it focuses on similarity to specific items.

- Applicability:
  - User-Based is often preferred in environments where user preferences are more important than the items’ specific attributes (like in social media or content platforms).
  - Item-Based is effective in scenarios where item characteristics are more influential, like in e-commerce or media streaming services.

- Sparsity:
  - User-Based has sparsity problems, since the amount of rating data on a per-user level is likely to be small. This also relates to the cold start problem.
  - Item-Based does not have the same sparsity problem, since each item is likely to have a lot of associated ratings, unless it is a completely new item.

Let’s consider a hypothetical scenario: You are building a recommender system for a new online bookstore.

In this scenario, item-based collaborative filtering would likely be the preferred technique. Here’s why:

- Stable Item Attributes: Books have well-defined and stable attributes (genre, author, etc.), so the rating patterns that determine item-item similarity change slowly over time.

- Long-Tail Content: Bookstores often have a wide variety of books, including less popular (long-tail) items. Item-based filtering can help surface these niche books based on their similarity to popular titles.

- Changing User Preferences: Users’ reading preferences can change over time, but the nature of books remains constant. Item-based filtering can more reliably suggest books similar to what a user currently enjoys.

- Scalability: As the user base grows, maintaining an item-item similarity matrix is more manageable than constantly updating a user-user similarity matrix.

In summary, item-based collaborative filtering is typically more suitable for product-centric environments like online bookstores, where the attributes of the items play a crucial role in recommendations. User-based collaborative filtering, on the other hand, excels in scenarios where understanding and leveraging user community behavior is more relevant.

Naughty Calendars™️ is a new company specializing in selling a wide range of inappropriate calendars. Which collaborative recsys would be preferred here?

In this scenario, the choice between item-based and user-based collaborative filtering could go either way, depending on specific business goals and customer behavior. However, user-based collaborative filtering might be slightly more advantageous. Here’s why:

- Unique and Niche Interests: Given the unique and potentially niche nature of the product (naughty-themed calendars), user preferences can be quite varied and specific. User-based filtering can leverage the similarities in users’ purchasing behaviors to recommend calendars that others with similar tastes have appreciated.

- Community and Shared Interests: The product range suggests a certain sense of community or shared humor among customers. User-based filtering can tap into these communal preferences, recommending products that have appealed to similar users.

- Dynamic and Seasonal Trends: The popularity of certain calendar themes may vary with seasons or trends. User-based filtering can quickly adapt to these changes by observing shifts in user preferences.

- Engagement and Cross-Selling: For a business like “Naughty Calendars”, engaging customers and encouraging them to explore different themes is key. User-based collaborative filtering can introduce customers to calendars that they might not have discovered on their own but have been popular among users with similar tastes.

Let’s say a company Spodcast™️ has been operating for 4 months, and has constructed the following user x item matrix. On Spodcast™️, users rate podcasts on a scale of 1-5. Please walk me through the process of predicting user 3’s rating of podcast 1.

The value at row $i$, column $j$ represents user $i$’s rating of podcast $j$.

Step 1

The first step is to understand the similarity of podcast 1 to every other podcast based on their ratings.

To illustrate, let’s just focus on the similarity between podcast 1 and podcast 2.

All we need to do is impute the missing values. Let’s just use the average. Now our column vectors representing podcast 1 and podcast 2 look like this:

Step 2

To calculate similarity, we use the inverse of distance.

To find the distance, we can employ any of the dozens of techniques we know for comparing two vectors. For now, let’s just use the Euclidean distance.

For our vectors for podcast 1 and podcast 2, this means:
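In general form, the calculation can be sketched as follows. The notation is assumed here for illustration: $p_1$ and $p_2$ are the imputed column vectors for podcasts 1 and 2, $r_{u,j}$ is user $u$’s (possibly imputed) rating of podcast $j$, and $n$ is the number of users. Reading ‘inverse of distance’ as $1/d$ is also an assumption; $1/(1+d)$ is a common variant that avoids division by zero when two columns are identical.

$$
d(p_1, p_2) = \sqrt{\sum_{u=1}^{n} \left(r_{u,1} - r_{u,2}\right)^2}, \qquad \mathrm{sim}(p_1, p_2) = \frac{1}{d(p_1, p_2)}
$$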

This leaves us with the following similarity matrix (if we were to reapply this step for all podcasts). Note that we would normally get a full, square similarity matrix, but in this case we’re only interested in the similarities for podcast 1.

Podcast 1 Similarities

User 3’s ratings

Step 3

Now that we’ve used ‘collaborative’ techniques (aggregating everyone’s ratings) to calculate item-item similarities, it’s time to filter.

The aim is to ‘predict’ the rating for podcast 1 by taking the weighted average of user 3’s existing ratings, weighted by podcast 1’s similarities. This is the part where most people stuff up - you must only include user 3’s existing, known ratings in the weighted average, meaning some of the similarities will be discarded.

This yields user 3’s predicted rating of podcast 1. The next step would be to repeat this process for all podcasts that they had not yet listened to (starting from step 1), then recommend based on the ‘top k’ predicted ratings.
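The three steps can be sketched end to end in Python. The ratings matrix below is made up for illustration and is not the Spodcast matrix from the walkthrough; imputing with each podcast’s mean rating and using $1/(1+d)$ as the similarity are also assumptions.

```python
import numpy as np

# Hypothetical user x podcast ratings (1-5); np.nan marks "not yet rated".
# Rows are users 1-4, columns are podcasts 1-4.
R = np.array([
    [4.0,    5.0,    np.nan, 1.0],
    [5.0,    4.0,    2.0,    np.nan],
    [np.nan, 4.0,    1.0,    2.0],   # user 3 has not rated podcast 1
    [2.0,    np.nan, 5.0,    4.0],
])

def predict_rating(R: np.ndarray, user: int, item: int) -> float:
    """Item-based prediction following steps 1-3 (0-indexed user and item)."""
    # Step 1: impute missing values with each podcast's mean rating.
    col_means = np.nanmean(R, axis=0)
    filled = np.where(np.isnan(R), col_means, R)

    # Step 2: Euclidean distance from the target podcast to every podcast,
    # converted to a similarity. 1 / (1 + d) is used rather than 1 / d so
    # that identical columns do not cause a division by zero (an assumption).
    dists = np.linalg.norm(filled - filled[:, [item]], axis=0)
    sims = 1.0 / (1.0 + dists)

    # Step 3: weighted average over only the podcasts this user has rated.
    known = ~np.isnan(R[user])
    known[item] = False  # never use the target podcast itself
    # Assumes the user has rated at least one other podcast.
    return float(np.sum(sims[known] * R[user, known]) / np.sum(sims[known]))

pred = predict_rating(R, user=2, item=0)  # user 3, podcast 1
```

Because the prediction is a weighted average of user 3’s known ratings, it always falls between their lowest and highest existing rating.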

How could you combat the sparse matrix problem by exploiting linear algebra?

Read about the really cool Matrix Factorization technique that was found, here.
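A minimal sketch of the idea, assuming a tiny made-up ratings matrix and plain stochastic gradient descent: approximate the sparse matrix as the product of two small dense factor matrices, fitting only on the observed entries. The function name `factorize` and all hyperparameters are hypothetical choices, not a reference implementation.

```python
import numpy as np

def factorize(R, k=2, steps=2000, lr=0.01, reg=0.02, seed=0):
    """Fit R ~ P @ Q.T on observed (non-NaN) entries with plain SGD."""
    rng = np.random.default_rng(seed)
    n_users, n_items = R.shape
    P = rng.normal(scale=0.1, size=(n_users, k))  # user factors
    Q = rng.normal(scale=0.1, size=(n_items, k))  # item factors
    observed = [(u, i) for u in range(n_users) for i in range(n_items)
                if not np.isnan(R[u, i])]
    for _ in range(steps):
        for u, i in observed:
            err = R[u, i] - P[u] @ Q[i]
            p_u = P[u].copy()  # keep the old value for Q's update
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_u - reg * Q[i])
    return P, Q

# Hypothetical sparse ratings; np.nan marks missing entries.
R = np.array([
    [4.0,    5.0,    np.nan, 1.0],
    [5.0,    4.0,    2.0,    np.nan],
    [np.nan, 4.0,    1.0,    2.0],
    [2.0,    np.nan, 5.0,    4.0],
])

P, Q = factorize(R)
R_hat = P @ Q.T  # dense matrix: every missing entry now has a prediction
```

The dense product `R_hat` fills in every missing cell, which is how factorization sidesteps sparsity: similarity is learned in a low-dimensional latent space instead of on mostly-empty rating vectors.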

Something where the answer is offline calculating and clustering or something

What are some common weaknesses of recommender systems?

- Interpretability

- Rare items are ignored

- Conversely, over-recommendation can occur

- Cold start problem.

- Sparsity in the user x item matrix

↳ Explain how you could combat the cold start problem

The cold start problem refers to the difficulty of making accurate recommendations when there’s insufficient data on new users or items.

- Content-Based Filtering: Use attributes of items or users to make recommendations. For new items, analyze their features (like genre, author, or specifications) and recommend them to users with similar interests. For new users, ask them to provide preferences during sign-up.

- Hybrid Systems: Combine collaborative and content-based filtering to mitigate the lack of data. This approach can utilize the available content data when collaborative data is scarce.

- Use of Demographic Data: Utilize demographic information of new users (such as age, location, or occupation) to make initial recommendations based on the preferences of similar demographic groups.

- User Onboarding Process: Encourage new users to rate items they’ve already experienced or select preferences during sign-up, providing initial data for the recommendation algorithm.

- Transfer Learning: Use data or models from a similar domain or task where data is abundant to jump-start the recommender system in a new domain.
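As a sketch of the content-based option, a brand-new item with no ratings can still be scored against a user’s taste profile using item features alone. The book names, the three genre features, and the helper `rank_unseen` are hypothetical.

```python
import numpy as np

# Hypothetical genre features per book: [fantasy, romance, history].
item_features = {
    "old_fantasy_novel": np.array([1.0, 0.0, 0.0]),
    "old_romance_novel": np.array([0.0, 1.0, 0.0]),
    "new_fantasy_epic":  np.array([1.0, 0.0, 0.2]),  # new: no ratings yet
    "new_history_book":  np.array([0.0, 0.0, 1.0]),  # new: no ratings yet
}

def cosine(a, b):
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

def rank_unseen(liked, item_features):
    """Rank items the user has not liked yet against their feature profile."""
    # The profile is the average feature vector of the items the user liked.
    profile = np.mean([item_features[name] for name in liked], axis=0)
    scores = {name: cosine(profile, feats)
              for name, feats in item_features.items() if name not in liked}
    return sorted(scores, key=scores.get, reverse=True)

ranking = rank_unseen(["old_fantasy_novel"], item_features)
```

No rating data for the new items is needed here, which is why content features are a common fallback until collaborative signals accumulate.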

↳ Explain how you could address the over-recommending problem:

- Diversity Enhancement: Intentionally include diverse and novel items in the recommendation list, even if they have a slightly lower predicted rating.

- Exploration vs. Exploitation: Implement algorithms that balance exploitation (recommending items with high predicted relevance) with exploration (trying out less certain recommendations to discover new preferences).

- Long-Tail Promotion: Include long-tail items (less popular or niche items) in recommendations to provide a broader range of choices.

- User Feedback Mechanism: Allow users to give feedback on recommendations (like “I don’t like this” or “Show me more like this”), and adjust future recommendations accordingly.

- Frequency Capping: Limit how often a particular type of item is recommended within a given timeframe.

- Temporal Dynamics: Take into account changes in user preferences over time and adapt recommendations accordingly.
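One way to sketch diversity enhancement is greedy Maximal Marginal Relevance (MMR) re-ranking, a technique swapped in here as an example rather than one named in the text. The relevance scores, similarity matrix, and the trade-off parameter `lam` are hypothetical.

```python
import numpy as np

def mmr_rerank(relevance, similarity, k, lam=0.7):
    """Greedy MMR: pick k items, trading relevance against redundancy."""
    candidates = list(range(len(relevance)))
    selected = []
    while candidates and len(selected) < k:
        def mmr_score(i):
            # Redundancy is the highest similarity to anything already picked.
            redundancy = max((similarity[i][j] for j in selected), default=0.0)
            return lam * relevance[i] - (1 - lam) * redundancy
        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return selected

# Hypothetical scores: items 0 and 1 are near-duplicates, item 2 is different.
relevance = [0.9, 0.85, 0.6]
similarity = np.array([
    [1.0,  0.95, 0.1],
    [0.95, 1.0,  0.1],
    [0.1,  0.1,  1.0],
])

picked = mmr_rerank(relevance, similarity, k=2, lam=0.5)
```

With `lam=1.0` the function reduces to plain top-k by relevance; lowering `lam` pushes near-duplicates further down the list.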

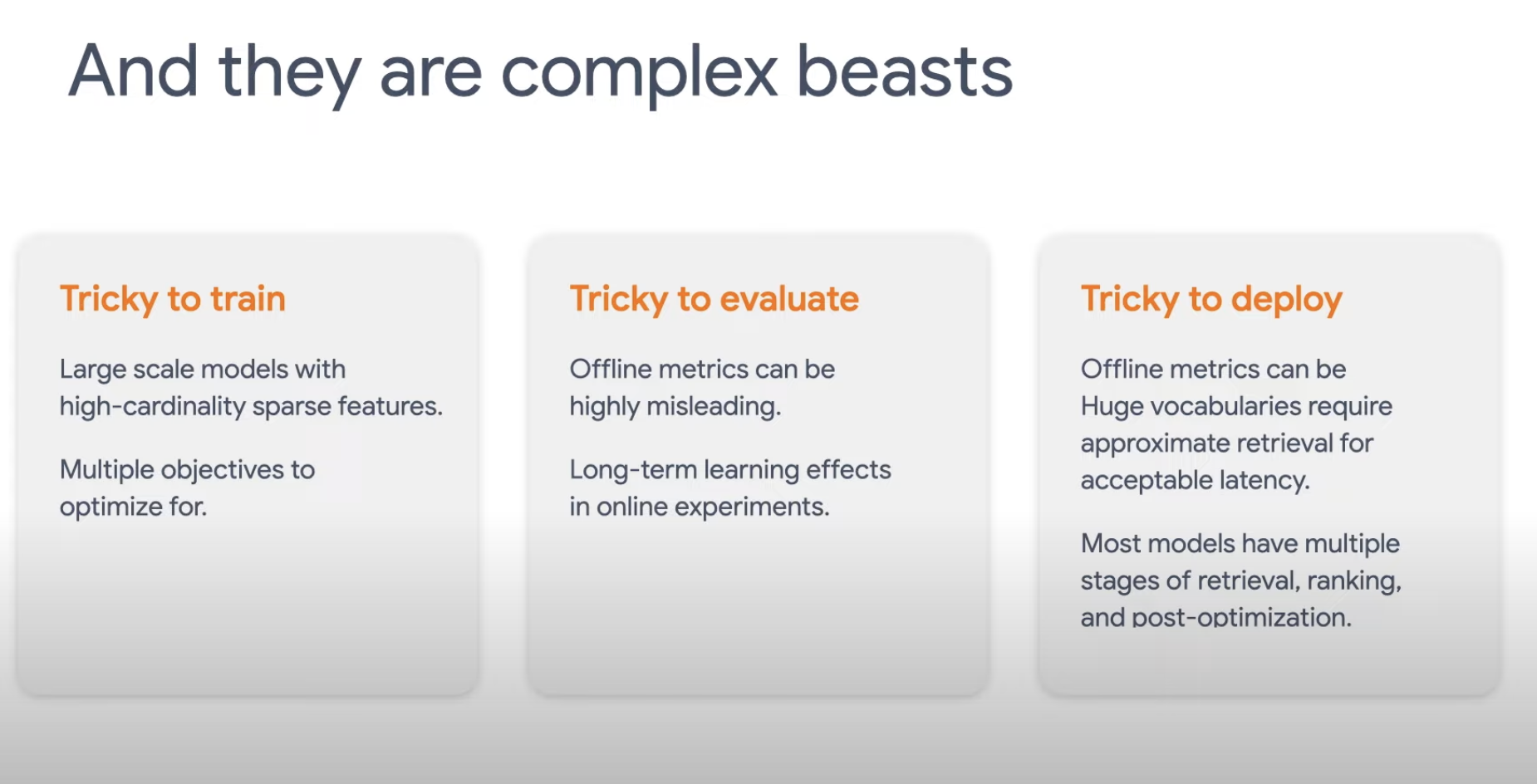

What makes recommender systems challenging to deploy?

Why might a recommender system not be performing as well as initially intended?

Cold Start Problem

- Recommending items for new users or recommending new items to users can be challenging without sufficient data.

- An item x user matrix for a just-launched platform might look like this:

Why might a recommender system not be performing as well in the long term?

- Diversity vs Accuracy: Over-optimizing for accuracy can lead to a lack of diversity in recommendations.

- Changing User Preferences: Users’ preferences can evolve over time, making old data less relevant. This is related to model drift.

- Scalability Issues: Handling recommendations for a vast number of users and items in real-time can be computationally challenging.

- Feedback Loops: If the system continuously recommends a certain type of content, users might only interact with that, leading to biased future recommendations.

- Popularity Bias: Recommender systems might overly focus on popular items, overshadowing niche ones.

How do you evaluate the effectiveness of recommender systems post-deployment?

Enhance accuracy and relevance of recommendations by deeply understanding user profiles and item characteristics.

Overlaps with this question about how to monitor model performance in general: go here.

- Accuracy Metrics:
  - Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE): These metrics measure the average magnitude of the errors in a set of predictions, without considering their direction. Lower values indicate better accuracy.
  - Precision and Recall: Precision measures the proportion of recommended items that are relevant, while recall measures the proportion of relevant items that are recommended.
  - F1 Score: The harmonic mean of precision and recall, providing a single metric that balances both.
  - Read about a commonly used but tricky metric, mean average precision, here. (Write a Python function to implement the mean average precision metric.)

- Diversity and Novelty:
  - Diversity: Evaluates how varied the recommendations are. A good recommender system should not always suggest similar items.
  - Novelty: Assesses how new or unexpected the recommendations are to a user. Recommending only popular items might not always be the best strategy.

- Coverage:
  - Catalog Coverage: Refers to the percentage of items in the catalog that are recommended across all users.
  - User Coverage: Measures the proportion of users for whom the system can generate recommendations.

- Ranking Metrics:
  - Normalized Discounted Cumulative Gain (NDCG): Measures the quality of the ranking of the recommended items, considering the position of relevant items in the list.
  - Mean Reciprocal Rank (MRR): Focuses on the rank of the first relevant recommendation.

- Utility-based Metrics:
  - These metrics are based on the utility or satisfaction a user gets from the recommendations, which might include aspects like relevancy, timeliness, or personalization.

- A/B Testing:
  - In real-world scenarios, A/B testing is often used where one group of users is given recommendations based on the new model and another group based on the old model. User engagement and satisfaction are then compared.

- User Studies and Surveys:
  - Direct feedback from users about the relevance and usefulness of the recommendations can be invaluable.

- Long-Term Evaluation:
  - Observing how the effectiveness of a recommender system evolves over time, especially in adapting to changes in user preferences.
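The ranking-oriented metrics above can be sketched in a few lines of Python. This is one possible implementation under stated assumptions: relevance is binary, each recommendation list contains no duplicates, and average precision is normalized by the number of relevant items (other conventions, such as dividing by min(k, number of relevant items), are also in use). All function names are hypothetical.

```python
import math

def average_precision(recommended, relevant):
    """AP for one user: mean of precision@rank over ranks holding a hit."""
    relevant = set(relevant)
    if not relevant:
        return 0.0
    hits, precision_sum = 0, 0.0
    for rank, item in enumerate(recommended, start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)

def mean_average_precision(all_recommended, all_relevant):
    """MAP: average of per-user AP over all users."""
    aps = [average_precision(rec, rel)
           for rec, rel in zip(all_recommended, all_relevant)]
    return sum(aps) / len(aps) if aps else 0.0

def reciprocal_rank(recommended, relevant):
    """1 / rank of the first relevant item, or 0.0 if there is none."""
    relevant = set(relevant)
    for rank, item in enumerate(recommended, start=1):
        if item in relevant:
            return 1.0 / rank
    return 0.0

def ndcg(recommended, relevant):
    """NDCG with binary relevance and a log2 position discount."""
    relevant = set(relevant)
    dcg = sum(1.0 / math.log2(rank + 1)
              for rank, item in enumerate(recommended, start=1)
              if item in relevant)
    ideal_hits = min(len(relevant), len(recommended))
    idcg = sum(1.0 / math.log2(rank + 1) for rank in range(1, ideal_hits + 1))
    return dcg / idcg if idcg else 0.0

# Hypothetical example: two users, their ranked recommendations and true likes.
recs = [["a", "b", "c", "d"], ["x", "y"]]
likes = [{"a", "c"}, {"y"}]
map_score = mean_average_precision(recs, likes)
```

MRR would be the mean of `reciprocal_rank` over users, in the same way that MAP averages `average_precision`.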

Evaluating the effectiveness of recommender systems once they have been deployed is crucial for understanding their real-world performance and impact. Here’s how I would approach this:

- Monitoring Key Performance Indicators (KPIs):
  - User Engagement Metrics: Track metrics like click-through rates, conversion rates, average time spent on the platform, and repeat usage. These indicators often reflect how well the recommendations align with user interests.
  - Revenue Metrics: For commercial applications, measuring the impact on sales, average order value, and overall revenue can indicate the effectiveness of the recommender system in driving business goals.

- Online A/B Testing:
  - Implement A/B testing by exposing different groups of users to different recommendation algorithms (or different configurations of the same algorithm) and measuring the difference in user response. This method provides direct feedback on the performance of the recommender system under real-world conditions.

- User Satisfaction Surveys:
  - Conduct surveys or collect direct feedback from users about their satisfaction with the recommendations. This qualitative data can provide insights that are not always apparent from quantitative metrics.

- Long-Term User Behavior Analysis:
  - Observe changes in long-term user behavior, like retention rates and lifetime value (LTV). A successful recommender system often leads to improved user retention and increased LTV.

- Diversity and Serendipity:
  - Evaluate how diverse the recommendations are and whether they include novel or unexpected items. This can be crucial for maintaining user interest over time.

- Fairness and Bias:
  - Monitor for any unintentional biases in recommendations, such as over-representing certain types of items or under-serving certain user groups. Ensuring fairness is key to the long-term success and acceptability of the system.

- Scalability and Efficiency:
  - Assess the system’s scalability and response time, especially under peak loads. The recommender system should be able to handle large volumes of requests efficiently.

- Error Analysis:
  - Conduct periodic error analysis to understand where and why the recommender system is failing. This can lead to targeted improvements in the algorithm.

- Comparative Analysis:
  - Compare the deployed system’s performance against baseline models or previous versions to assess relative improvements.

- Compliance and Privacy:
  - Ensure that the recommender system complies with privacy laws and regulations, particularly in how it uses user data.

Often, a combination of these methods is used for a comprehensive evaluation.
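For the online A/B testing step, a common way to judge whether a difference in click-through rate is more than noise is a two-proportion z-test. The sketch below uses only the standard library; the function name and the traffic numbers are hypothetical, and a real experiment would also need a pre-planned sample size and guardrail metrics.

```python
import math

def two_proportion_z_test(clicks_a, users_a, clicks_b, users_b):
    """Return (z, two-sided p-value) for the difference in click rates B - A."""
    p_a = clicks_a / users_a
    p_b = clicks_b / users_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (clicks_a + clicks_b) / (users_a + users_b)
    # Assumes 0 < pooled < 1; otherwise the standard error is zero.
    se = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    z = (p_b - p_a) / se
    # Two-sided tail probability of a standard normal beyond |z|.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical experiment: old model (A) vs new model (B).
z, p_value = two_proportion_z_test(clicks_a=1000, users_a=10000,
                                   clicks_b=1100, users_b=10000)
```

A positive `z` with a small `p_value` suggests the new model’s click-through rate is genuinely higher, though engagement lifts should still be checked against longer-term metrics such as retention.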

What is a strongly complement score?

↳ What is a complement score?

↳ What is a substitute score?

Do you know how to implement a recommender system using TensorFlow and Keras?

If no, go to the TensorFlow section of this book.